A book by William H. Calvin UNIVERSITY OF WASHINGTON SEATTLE, WASHINGTON 98195-1800 USA

HOW BRAINS THINK

A Science Masters book (BasicBooks in the US; to be available in 12 translations) copyright ©1996 by William H. Calvin

Syntax as a Foundation of Intelligence

Humans have some spectacular abilities, compared to our closest cousins among the surviving apes — even the apes that share much of our social intelligence, reassuring touches, and abilities to deceive. We have a syntactic language capable of supporting metaphor and analogical reasoning. We’re always planning ahead, imagining scenarios for the future, and then choosing in ways that take remote contingencies into account. We even have music and dance. What were the steps in transforming a chimpanzeelike creature into a nearly human one? That’s a question which is really central to our humanity. There’s no doubt that syntax is what human levels of intelligence are mostly about — that without syntax we would be little cleverer than chimpanzees. The neurologist Oliver Sacks’s description of an eleven-year-old deaf boy, reared without sign language for his first ten years, shows what life is like without syntax:

There is thought to be a bioprogram, sometimes called Universal Grammar. It is not the mental grammar itself (after all, each dialect has a different one) but rather the predisposition to discover grammars in one’s surroundings — indeed, particular grammars, out of a much larger set of possible ones. To understand why humans are so intelligent, we need to understand how our ancestors remodeled the ape’s symbolic repertoire and enhanced it by inventing syntax.

Stones and bones are, unfortunately, about all that remain of our ancestors in the last four million years, not their higher intellectual abilities. Other species branched off along the way, but they are no longer around to test. We have to go back six million years before there are living species with whom we shared a common ancestor: the nonhominid branch itself split about three million years ago into the chimpanzee and the much rarer bonobo (the “chimpanzee of the Pygmies”). If we want a glimpse at ancestral behaviors, they’re our best chance. Bonobos share more behavioral similarities with humans and they’re also much better subjects for studying language than the chimps that starred in the 1960s and 1970s.

Linguists have a bad habit of claiming that anything lacking syntax isn’t language. That, ahem, is like saying that a Gregorian chant isn’t music, merely because it lacks Bach’s use of the contrapuntal techniques of stretto, parallel voice leading, and mirror inversions of themes. Since linguistics confines itself to “Bach and beyond,” it has primarily fallen to the anthropologists, the ethologists, and the comparative psychologists to be the “musicologists,” to grapple with the problem of what came before syntax. The linguists’ traditional put-down of all such research (“It isn’t really language, you know”) is a curious category error, since the object of the research is to understand the antecedents of the powerful structuring that syntax provides.

One occasionally gets some help from the ontogeny-recapitulates-phylogeny crib, but human language is acquired so rapidly in early childhood that I suspect a streamlining, one that thoroughly obscures any original staging, rather as freeways tend to obliterate post roads. The fast track starts in infants with the development of phoneme boundaries: prototypes become “magnets” that capture variants. Then there’s a pronounced acquisitiveness for new words in the second year, for inferring patterns of words in the third (kids suddenly start to use past tense -ed and plural -s with consistency, a generalization that occurs without a lot of trial-and-error), and for narratives and fantasy by the fifth. It is fortunate for us that chimps and bonobos lack such fast-tracking, because it gives us a chance to see, in their development, the intermediate stages that were antecedent to our powerful syntax.

Vervet monkeys in the wild use four different alarm calls, one for each of their typical predators. They also have other vocalizations to call the group together or to warn of the approach of another group of monkeys. Wild chimpanzees use about three dozen different vocalizations, each of them, like those of the vervets, meaningful in itself. A chimp’s loud waa-bark is defiant, angry. A soft cough-bark is, surprisingly, a threat. Wraaa mixes fear with curiosity (“Weird stuff, this!”) and the soft huu signifies
weirdness without hostility (“What is this stuff?”). If a waa-wraaa-huu is to mean something different than huu-wraaa-waa, the chimp would have to suspend judgment, ignoring the standard meanings of each call until the whole string had been received and analyzed. This doesn’t happen. Combinations are not used for special meanings. Humans also have about three dozen units of vocalization, called phonemes — but they’re all meaningless! Even most syllables like “ba” and “ga” are meaningless unless combined with other phonemes to make meaningful words, like “bat” or “galaxy.” Somewhere along the line, our ancestors stripped most speech sounds of their meaning. Only the combinations of sounds now have meaning: we string together meaningless sounds to make meaningful words. That’s not seen anywhere else in the animal kingdom. Furthermore, there are strings of strings — such as the word phrases that make up this sentence — as if the principle were being repeated on yet another level of organization. Monkeys and apes may repeat an utterance to intensify its meaning (as do many human languages, such as Polynesian), but nonhumans in the wild don’t (so far) string together different sounds to create entirely new meanings. No one has yet explained how our ancestors got over the hump of replacing one-sound/one-meaning with a sequential combinatorial system of meaningless phonemes, but it’s probably one of the most important transitions that happened during ape-to-human evolution.
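The gap between one-sound/one-meaning and a combinatorial system can be made concrete with some rough counting. The sketch below is illustrative arithmetic only: the inventory size of 36 stands in for the "about three dozen" calls or phonemes mentioned above, and the function names are made up for this example. The counts are upper bounds, since every real language forbids many sound sequences.

```python
# Illustrative arithmetic only. INVENTORY = 36 is an assumption standing in
# for the "about three dozen" calls (chimpanzee) or phonemes (human).
INVENTORY = 36


def holistic_messages(n_calls: int) -> int:
    """One call, one meaning: the repertoire equals the number of calls."""
    return n_calls


def combinatorial_strings(n_units: int, max_len: int) -> int:
    """Count ordered strings of length 1..max_len built from n_units units."""
    return sum(n_units ** k for k in range(1, max_len + 1))


if __name__ == "__main__":
    print("holistic repertoire:", holistic_messages(INVENTORY))
    for length in (1, 2, 3, 4):
        print("strings up to length", length, ":",
              combinatorial_strings(INVENTORY, length))
```

With only three units per string, the same three dozen sounds already allow tens of thousands of distinct sequences, which is why giving up the meaning of the individual sound can pay off so handsomely.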

The honeybee appears, at least in the context of a simple coordinate system, to have broken out of the mold of one-sign/one-meaning. When she returns to her hive, she performs a “waggle dance” in a figure-8 that communicates information about the location of a food source that she has just visited. The angle of the figure-8 axis points toward the food. The duration of the dance is proportional to the distance from the hive: for example, at least in the traditional version of this story, three loops around the figure-8 would suggest 60 meters away to the average Italian honeybee, though 150 meters to a German one — a matter of genes rather than the company in which the bee was reared. Still, the linguists are not very impressed — in his Language and Species, Derek Bickerton notes:

One psychologist’s dog, as I noted earlier, understands about 90 items; the 60 it produces don’t overlap very much in meaning with the receptive ones. A sea lion has learned to comprehend 190 human gestures — but it doesn’t gesture back with anything near the same productivity. Bonobos have learned an even greater number of symbols for words and can combine them with gestures to make requests. A gray parrot has learned, over the course of a decade, a 70-word vocabulary that includes 30 object names, seven colors, five shape adjectives, and a variety of other “words” — and can make requests with some of them. None of these talented animals is telling stories about who did what to whom; they’re not even discussing the weather. But it is clear that our closest cousins, the chimpanzee and the bonobo, can achieve considerable levels of language comprehension with the aid of skilled teachers who can motivate them. The most accomplished bonobo, under the tutelage of Sue Savage-Rumbaugh and her coworkers, can now interpret sentences it has never heard before — such as “Kanzi, go to the office and bring back the red ball” — about as well as a two-and-a-half-year-old child. Neither bonobo nor child is constructing such sentences, but they can demonstrate by their actions that they understand them. And comprehension comes first, production later, as language develops in children. I often wonder how many of the limited successes in ape language studies were merely a matter of insufficient motivation; perhaps teachers have to be good enough to substitute for the normal self-motivating acquisitiveness of the young child. Or if the limited successes were from not starting with very young animals. If a bonobo could somehow become motivated in its first two years to comprehend new words at a rate approaching that of the year-old child, might the bonobo then go on to discover patterning of words in the manner of the pre-syntax child? 
But have it happen slowly enough for us to see distinct stages preceding serious syntax, the ones obscured by the streamlined freeways provided by the present human genome?

All of this animal communicative ability is very impressive, but is it language? The term “language” is used rather loosely by most people. First of all, it refers to a particular dialect such as English, Frisian, and Dutch (and the German of a thousand years ago, from which each was derived — and, further back, proto-Indo-European). But “language” also designates the overarching category of communication systems that are especially elaborate. Bee researchers use “language” to describe what they see their subjects doing, and chimpanzee researchers do the same.

At what point do animal symbolic repertoires become humanlike language? The answer isn’t obvious. Webster’s Collegiate Dictionary offers “a systematic means of communicating ideas or feelings by use of conventionalized signs, sounds, gestures, or marks having understood meanings” as one definition of language. That would encompass the foregoing examples. Sue Savage-Rumbaugh suggests that the essence of language is “the ability to tell another individual something he or she did not already know” which, of course, means that the receiving individual is going to have to use some Piagetian guessing-right intelligence in constructing a meaning.

But humanlike language? Linguists will immediately say “No, there are rules!” They will start talking about the rules implied by mental grammar and questioning whether or not these rules are found in any of the nonhuman examples. That some animals such as Kanzi can make use of word order to disambiguate requests does not impress them. The linguist Ray Jackendoff is more diplomatic than most, but has the same bottom line:

A lot of people have taken the issue to be whether the apes have language or not, citing definitions and counter-definitions to support their position. I think this is a silly dispute, often driven by an interest either in reducing the distance between people and animals or in maintaining this distance at all costs. In an attempt to be less doctrinaire, let’s ask: do the apes succeed in communicating? Undoubtedly yes. It even looks as if they succeed in communicating symbolically, which is pretty impressive. But, going beyond that, it does not look as though they are capable of constructing a mental grammar that regiments the symbols coherently. (Again, a matter of degree — maybe there is a little, but nothing near human capacity.) In short, Universal Grammar, or even something remotely like it, appears to be exclusively human.

What, if anything, does this dispute about True Language have to do with intelligence? Judging by what the linguists have discovered about mental structures and the ape-language researchers have discovered about bonobos inventing rules — quite a lot. Let us start simple.

Some utterances are so simple that fancy rules aren’t needed to sort out the elements of the message — most requests such as “banana” and “give” in either sequence get across the message. Simple association suffices.

But suppose there are two nouns in a sentence with one verb: how do we associate “dog boy bite” in any order? Not much mental grammar is needed, as boys usually don’t bite dogs. But “boy girl touch” is ambiguous without some rule to help you decide which noun is the actor and which is the acted upon. A simple convention can decide this, such as the subject-verb-object order (SVO) of most declarative sentences in English (“The dog bit the boy”) or the SOV of Japanese. In short word phrases, this boils down to the first noun being the actor — a rule that Kanzi probably has absorbed from the way that Savage-Rumbaugh usually phrases requests, such as “Touch the ball to the banana.”

You can also tag the words in a phrase in order to designate their role as subject or object, either with conventional inflections or by utilizing special forms called case markings — as when we say “he” to communicate that the person is the subject of the sentence but “him” when he is the object of the verb or preposition. English once had lots of case markings, such as “ye” for subject and “you” for object, but they now survive mostly in the personal pronouns and who/whom. Special endings can also tip you off about a word’s role in the phrase, as when -ly suggests to you that “softly” modifies the verb and not a noun. In highly inflected languages, such markings are extensively used, making word order a minor consideration in identifying the role a word is intended to play in constructing the mental model of relationships.
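The word-order convention described above can be sketched as a tiny role-assignment routine. This is a toy under stated assumptions, not a model of how Kanzi or anyone else does it: the three-word limit, the miniature lexicon, and the name `assign_roles` are all invented for illustration.

```python
from typing import Dict, List

# Hypothetical toy lexicon; a real parser would need far more than this.
NOUNS = {"boy", "girl", "dog"}
VERBS = {"touch", "bite"}

# Map each position letter to the role name used in the text.
ROLE_NAMES = {"S": "actor", "V": "action", "O": "acted_upon"}


def assign_roles(words: List[str], order: str = "SVO") -> Dict[str, str]:
    """Label a three-word phrase using a fixed word-order convention.

    `order` is a permutation of the letters S, V, O, such as "SVO" or "SOV".
    """
    if sorted(order) != ["O", "S", "V"] or len(words) != 3:
        raise ValueError("expected three words and an order such as 'SVO'")
    roles = {ROLE_NAMES[slot]: word for slot, word in zip(order, words)}
    if roles["action"] not in VERBS:
        raise ValueError("the word in the verb position is not a known verb")
    if roles["actor"] not in NOUNS or roles["acted_upon"] not in NOUNS:
        raise ValueError("noun positions must hold known nouns")
    return roles


if __name__ == "__main__":
    print(assign_roles(["boy", "touch", "girl"], "SVO"))
    print(assign_roles(["girl", "boy", "touch"], "SOV"))
```

The same three words yield different actors depending only on the convention in force, which is the sense in which word order is a shared rule rather than something carried by the words themselves.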

The simpler ways of generating word collections, such as pidgins (or my tourist German), are what the linguist Derek Bickerton calls protolanguage. They don’t utilize much in the way of mental rules. The word association (“boy dog bite”) carries the message, perhaps with some aid from customary word order such as SVO. Linguists would probably classify the ape
language achievements, both comprehension and production, as protolanguage. Children learn a mental grammar by listening to a language (deaf children by observing sign language). They are acquisitive of associations as well as new words, and one fancy set of associations constitutes the mental grammar of a particular language. Starting at about 18 months of age, children start to figure out the local rules and eventually begin using them in their own sentences. They may not be able to describe the parts of speech, or diagram a sentence, but their “language machine” seems to know all about such matters after a year’s experience. This biological tendency to discover and imitate order is so strong that deaf playmates may invent their own sign language (“home sign”) with inflections, if they aren’t properly exposed to one they can model. Bickerton showed that children invent a new language — a creole — out of the pidgin protolanguage they hear their immigrant parents speaking. A pidgin is what traders, tourists, and “guest workers” (and, in the old days, slaves) use to communicate when they don’t share a real language. There’s usually a lot of gesturing, and it takes a long time to say a little, because of all those circumlocutions. In a proper language with lots of rules (that mental grammar), you can pack a lot of meaning into a short sentence. Creoles are indeed proper languages: the children of pidgin speakers take the vocabulary they hear and create some rules for it — a mental grammar. The rules aren’t necessarily any of those they know from simultaneously learning their parents’ native languages. And so a new language emerges, from the mouths of children, as they quickly describe who did what to whom.

Which aspects of language are easy to acquire and which are difficult? Broad categories may be the easiest, as when the child goes through a phase of designating any four-legged animal as “doggie” or any adult male as “Daddy.” Going from the general to the specific is more difficult. But some animals, as we have seen, can eventually learn hundreds of symbolic
representations. A more important issue may be whether new categories can be created that escape old ones. The comparative psychologist Duane Rumbaugh notes that prosimians (lorises, galagos, and so forth) and small monkeys often get trapped by the first set of discrimination rules they are taught, unlike rhesus monkeys and apes, both of which can learn a new set of rules that violates the old one. We too can overlay a new category atop an old one, but it is sometimes difficult: categorical perception (the pigeonholing mentioned earlier, in association with auditory hallucinations) is why some Japanese have such a hard time distinguishing between the English sounds for L and R. The Japanese language has an intermediate phoneme, a neighbor to both L and R. Those English phonemes are, mistakenly, treated as mere variants on the Japanese phoneme. Because of this “capture” by the traditional category, those Japanese speakers who can’t hear the difference will also have trouble pronouncing them distinctly. Combining a word with a gesture is somewhat more sophisticated than one-word, one-meaning — and putting a few words together into a string of unique meaning is considerably more difficult. Basic word order is helpful in resolving ambiguities, as when you can’t otherwise tell which noun is the actor and which the acted upon. The SVO declarative sentence of English is only one of the six permutations of those units, and each permutation is found in some human language. Some word orders are more frequently found than others, but the variety suggests that word order is a cultural convention rather than a biological imperative in the manner proposed for Universal Grammar. Words to indicate points in time (“tomorrow” or “before”) require more advanced abilities, as do words that indicate a desire for information (“What” or “Are there”) and the words for possibility (“might” or “could”). 
It is worthwhile noting what a pidgin protolanguage lacks: it doesn’t use articles like “a” or “the,” which help you know whether a noun refers to a particular object or simply the general class of objects. It doesn’t use inflections (-s, -ly, and the like) or subordinate clauses, and it often omits the verb, which is guessed from the context. Though they take time to learn, vocabulary and basic word order are nonetheless easier than the other rule-bound parts of language. Indeed, in the studies of Jacqueline S. Johnson and Elissa L. Newport, Asian immigrants who learn English as adults succeed with vocabulary and basic-word-order sentences but have great difficulty with other tasks — tasks that those who arrived as children easily master. At least in English, the who-what-where-when-why-how questions deviate from basic word order: “What did John give to Betty?” is the usual convention (except on quiz shows in which questions mimic the basic word order and use emphasis instead: “John gave what to Betty?”). Nonbasic word orders in English are difficult for those who immigrated as adults, and so are other long-range dependencies, such as when plural object names must match plural verbs, despite many intervening adjectives. Not only do adult immigrants commit such grammatical errors, but they can’t detect errors when they hear them. For example, the inflectional system of English alters a noun when it refers to a multiplicity (“The boy ate three cookie.” Is that normal English?) and alters a verb when it refers to past time (“Yesterday the girl pet a dog.” OK?). 
Those who arrived in the United States before the age of seven make few such recognition errors as adults, and there is a steady rise in error rate for adults who began learning English between the ages of seven and fifteen — at which age the adult error level is reached (I should emphasize that the linguists were, in all cases, testing immigrants with ten years exposure to English, who score normally on vocabulary and the interpretation of basic word order sentences). By the age of two or three, children learn the plural rule: add -s. Before that, they treat all nouns as irregular. But even if they had been saying “mice,” once they learn the plural rule they will begin saying “mouses” instead. Eventually they learn to treat the irregular nouns and verbs as special cases, exceptions to the rules. Not only are children becoming acquisitive of the regular rules at about the time of their second birthday but it also appears that the window of opportunity is closing during the school years. It may not be impossible to learn such things as an adult, but simple immersion in an English-language society does not work for adults in the way that it does for children aged two to seven. Whether you want to call it a bioprogram or a Universal Grammar, learning the hardest aspects of language seems to be made easier by a childhood acquisitiveness that has a biological basis, just as does learning to walk upright. Perhaps this acquisitiveness is specific to language, perhaps it merely looks for intricate patterns in sound and sight and learns to mimic them. A deaf child like Joseph who regularly watched chess games might, for all we know, discover chess patterns instead. In many ways, this pattern-seeking bioprogram looks like an important underpinning for human levels of intelligence.

A dictionary will define the word “grammar” for you as (1) morphology (word forms and endings), (2) syntax (from the Greek “to arrange together” — the ordering of words into clauses and sentences), and (3) phonology (speech sounds and their arrangements). But just as we often use “grammar” loosely to refer to socially correct usage, the linguists sometimes go to the opposite extreme, using overly narrow rather than overly broad definitions. They often use “grammar” to specify just a piece of the mental grammar — all the little helper words, like “near,” “above,” and “into,” that aid in communicating such information as relative position. Whatever words like these are called, they too are quite important for our analysis of
intelligence. First of all, such grammatical items can express relative location (above, below, in, on, at, by, next to) and relative direction (to, from, through, left, right, up, down). Then there are the words for relative time (before, after, while, and the various indicators of tense) and relative number (many, few, some, the -s of plurality). The articles express a presumed familiarity or unfamiliarity (the for things the speaker thinks the hearer will recognize, a or an for things the speaker thinks the hearer won’t recognize) in a manner somewhat like pronouns. Other grammatical items in Bickerton’s list express relative possibility (can, may, might), relative contingency (unless, although, until, because), possession (of, the possessive version of -s, have), agency (by), purpose (for), necessity (must, have to), obligation (should, ought to), existence (be), nonexistence (no, none, not, un-), and so on. Some languages have verbal inflections that indicate whether you know something on the basis of personal experience or just at second hand. So grammatical words help to position objects and events relative to each other on a mental map of relationships. Because relationships (“bigger,” “faster,” and so forth) are what analogies usually compare (as in “bigger-is-faster”), this positioning-words aspect of grammar could also augment intelligence.

Syntax is a treelike structuring of relative relationships in your mental model of things which goes far beyond conventional word order or the aforementioned “positioning” aspects of grammar. By means of syntax, a speaker can quickly convey a mental model to a listener of who did what to whom. These relationships are best represented by an upside-down tree structure — not the sentence diagramming of my high school days but a modern version of diagramming known as an X-bar phrase structure. Since there are now some excellent popular
books on this subject, I will omit explaining the diagrams here (Whew!). Treelike structure is most obvious when you consider subordinate clauses, as in the rhyme about the house that Jack built. (“This is the farmer sowing the corn/ That kept the cock that crowed in the morn/ ...That lay in the house that Jack built”). Bickerton explains that such nesting or embedding is possible because:

As Bickerton notes, a sentence is like

Linguists, however, would like to place the language boundary well beyond such sentence comprehension: in looking at animal experiments, they want to see sentence production using a mental grammar; mere comprehension, they insist, is too easy. Though guessing at meaning often suffices for comprehension, the attempt to generate and speak a unique sentence quickly demonstrates whether or not you know the rules well enough to avoid ambiguities. Yet that production test is more relevant to the scientist’s distinctions than to the language learner’s; after all, comprehension comes first in children. The original attempts to teach chimps the manual sign language of the deaf involved teaching the chimp how to produce the desired movements; comprehension of what the sign signified only came later, if at all. Now that the ape-language research has finally addressed the comprehension issue, it looks like more of a hurdle than anyone thought — but once an animal is past it, spontaneous production increases.

Linguists aren’t much interested in anything simpler than real rules, but ethologists and the comparative and developmental psychologists are. Sometimes, to give everyone a piece of the action, we talk about languages plural, “language” in the sense of systematic communication, and Language with a capital L for the utterances of the advanced-syntax-using élite. All aid in the development of versatility and speed (and hence intelligence). While morphology and phonology also tell us something about cognitive processes, phrase structure, argument structure, and the relative-position words are of particular interest because of their architectural aspect — and that provides some insights about the mental structures available for the guessing-right type of intelligence.

How much of language is innate in humans? Certainly the drive to learn new words via imitation is probably innate in a way that a drive to learn arithmetic is not. Other animals learn gestures by imitation, but preschool children seem to average ten new words every day — a feat that puts them in a whole different class of imitators. And they’re acquiring important social tools, not mere vocabulary: the right tool for the job, the British neuropsychologist Richard Gregory emphasizes, confers intelligence on its user — and words are social tools. So this drive
alone may account for a major increment in intelligence over the apes. There is also the drive of the preschooler to acquire the rules of combination we call mental grammar. This is not an intellectual task in the usual sense: even children of low-average intelligence seem to effortlessly acquire syntax by listening. Nor is acquisition of syntax a result of trial-and-error, because children seem to make fairly fast transitions into syntactic constructions. Learning clearly plays a role but some of the rigidities of grammar suggest innate wiring. As Derek Bickerton points out, our ways of expressing relationships (such as all those above/below words) are resistant to augmentation, whereas you can always add more nouns. Because of regularities across languages in the errors made by children just learning to speak, because of the way various aspects of grammar change together across languages (SVO uses prepositions such as “by bus,” SOV implies postpositions such as “bus by”), because of those adult Asian immigrants, and because of certain constructions that seem forbidden in any known language, linguists such as Noam Chomsky have surmised that something biological is involved — that the human brain comes wired for the treelike constructions needed for syntax, just as it is wired for walking upright:

If a young bonobo or chimpanzee had the two drives that young human children have — to seek out words and discover rules — in sufficient intensity and at the right time in brain development, would it self-organize a language cortex like ours and use it to crystallize a set of rules out of word mixtures? Or is that neural wiring innate, there without the relevant experience and simply unused if the drives or opportunities are missing? Either, it seems to me, is consistent with the Chomskian claim. Universal Grammar might result from the “crystallization” rules of the self-organization, arising just as “flashers” and “gliders” do from
cellular automata. And the way you experimentally distinguish between uniquely human innate wiring and input-driven crystallization is to push vocabulary and sentences on promising ape students, with clever motivation schemes attempting to substitute for the child’s untutored acquisitiveness. It is, I think, fortunate that the apes are borderline when it comes to having the linguists’ True Language, because by studying their struggles we might eventually glimpse the functional foundations of mental grammar. In the course of human evolution, the stepping-stones may have been paved over, overlain by superstructures and streamlined beyond recognition. Sometimes ontogeny recapitulates phylogeny (the baby’s attempts to stand up recapitulating the quadruped-to-biped phylogeny; the descent of the larynx in the baby’s first year partially recapitulating the ape-to-human changes). However, development happens so rapidly that you fail to see the reenactment of evolutionary progress. If we could see the transition to fancier constructions in bonobos, however, we might be able to discover what sorts of learning augment syntax, what other tasks compete and so hinder language, and what brain areas “light up” in comparison to those in humans. Besides the major implications for our view of what’s uniquely human, an understanding of ape linguistic foundations might help us teach the language-impaired, might even reveal synergies that would aid language learning and better guessing. It is only through the efforts of skilled teachers of bonobos that we’re likely to answer questions about the stepping-stones.

Syntax is what you use, it would appear, to make those fancier mental models, the ones involving who did what to whom, why, when, and with what means. Or at least if you want to communicate such an elaborate understanding, you’ll have to translate your mental model of those relationships into the mental grammar of the language, which will then tell you how to order or inflect the words so the listener can reconstruct your mental model. It might, of course, be easier just to “think in syntax” in the first place. In that sense, we’d expect the augmentation
of syntax to result in a great augmentation of guessing-right intelligence. The name of the game is to recreate your mental model in the listener’s mind. The recipient of your message will need to know the same mental grammar, in order to decode the string of words into approximately the same mental understanding. So syntax is about structuring relationships between items (words, usually) in your underlying mental model, not about the surface of things — such as SVO or inflections, which are mere clues. Your job as a listener is to figure out what sort of tree will provide an adequate fit to the string of words you hear. (Imagine being sent the numerical values for a spreadsheet and having to guess the spreadsheet formulas needed to interrelate them!). The way this could work is that you try out a simple arrangement (actor, action, acted-upons, modifiers) and wind up with words left over. You try another tree, and discover that there are unfilled positions that can’t be left empty. You use those clues about tree structure provided by the speaker’s plurals and verbs — for example, you know that “give” requires both a recipient and an item given. If there is no word (spoken or implied) to fill a required slot, then you scratch that tree and go on to yet another. Presumably you try a lot of different trees simultaneously rather than seriatim, because comprehension (finding a good enough interpretation of that word string) can operate at blinding speed. In the end, several trees may fill properly with no words left over, so you have to judge which of the interpretations is most reasonable given the situation you’re in. Then you’re finished. That’s comprehension — at least in my (surely oversimplified) version of the linguists’ model.
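The try-a-tree, check-the-slots procedure just described can be sketched in a few lines. Everything specific here is an assumption made for illustration: the verb frames in `FRAMES`, the flat one-level "tree," the rule that every non-verb word must fill exactly one slot, and the function name `comprehend`. The candidates are also tried one after another here, whereas the text supposes they are tried simultaneously.

```python
from typing import Dict, List

# Hypothetical verb frames: each verb lists the alternative sets of slots
# ("trees") it can head. "give" gets two orderings so that some word strings
# fit more than one tree.
FRAMES: Dict[str, List[List[str]]] = {
    "sleep": [["actor"]],
    "touch": [["actor", "acted_upon"]],
    "give": [["actor", "recipient", "item"], ["actor", "item", "recipient"]],
}


def comprehend(words: List[str]) -> List[Dict[str, str]]:
    """Return every frame that fits with no words left over and no empty slots."""
    candidates = []
    for i, word in enumerate(words):
        for slots in FRAMES.get(word, []):
            others = words[:i] + words[i + 1:]
            # Too many words means leftovers; too few means an unfilled
            # required slot. Either way this tree is scratched.
            if len(others) == len(slots):
                candidates.append({"action": word, **dict(zip(slots, others))})
    return candidates


if __name__ == "__main__":
    print(comprehend(["John", "give", "book"]))           # required slot unfilled
    print(comprehend(["boy", "touch", "girl"]))           # one tree fits
    print(comprehend(["John", "give", "Betty", "book"]))  # two trees fit
```

When more than one candidate survives, as in the last call, choosing among them is left to context, which is the judgment step the paragraph above ends with.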

|

|

Think in terms of a game of solitaire: you’re not finished until you have successfully

uncovered all the face-down cards — while following the rules about descending order and

alternate colors — and in some shuffles of the deck, it is impossible to succeed. You lose that

round, shuffle the deck, and try again. For some word strings, no amount of rearranging will

find a meaningful set of relationships — a story you can construct involving who did what to

whom. If someone utters such an uninterpretable word string to you, they’ve failed an important

test of language ability.

For some sentences generated by a linguistically competent human, you have the opposite problem: you can construct multiple scenarios — alternative ways of understanding the word string. Generally, one of the candidates will satisfy the conventions of the language or the situation better than others, thus becoming the “meaning” of the communication. Context creates default meanings for some items in the sentence, saving the speaker from producing a longer utterance. (Pronouns are such a shortcut.)

The kind of formal rules of compositional correctness you learned in high school are, in fact, violated all the time in the incomplete utterances of everyday speech. But everyday speech suffices, because the real test is in whether you convey your mental model of who did what to whom to your audience, and the context will usually allow the listener to fill in the missing pieces. Because a written message has to function without much of the context, and without such feedback as an enlightened or puzzled look on the listener’s face, we have to be more complete — indeed, more redundant — when writing than when speaking, making fuller use of syntax and grammatical rules.

|

|

Linguists would like to understand how sentences are generated and comprehended in a

machinelike manner — what enables the blinding speed of sentence comprehension. I like to call

this “language machine” a lingua ex machina. That does, of course, invite comparison with the

deus ex machina of classical drama — a platform wheeled on stage (the god machine), from which

a god lectured the other actors, and more recently the term bestowed on any contrived

resolution of a plot difficulty. Until our “playwrighting” technique improves, our algorithms

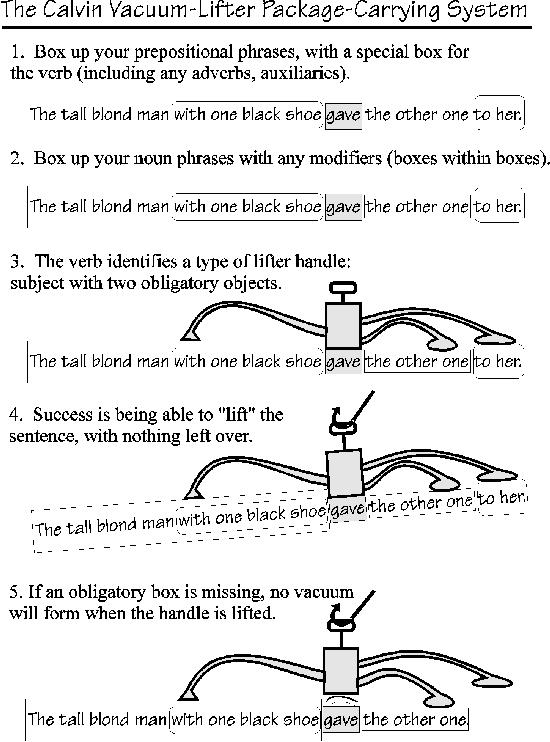

for understanding sentences will also seem contrived. I’m going to propose how one such lingua ex machina could work, combining phrase structure and argument structure in an algorithmic way. Linguists will probably find it at least as contrived as other diagraming systems. But here’s a few paragraphs’ worth of Calvin’s Vacuum-Lifter Package-Carrying System, involving processes as simple as those of a shipping department or production line.

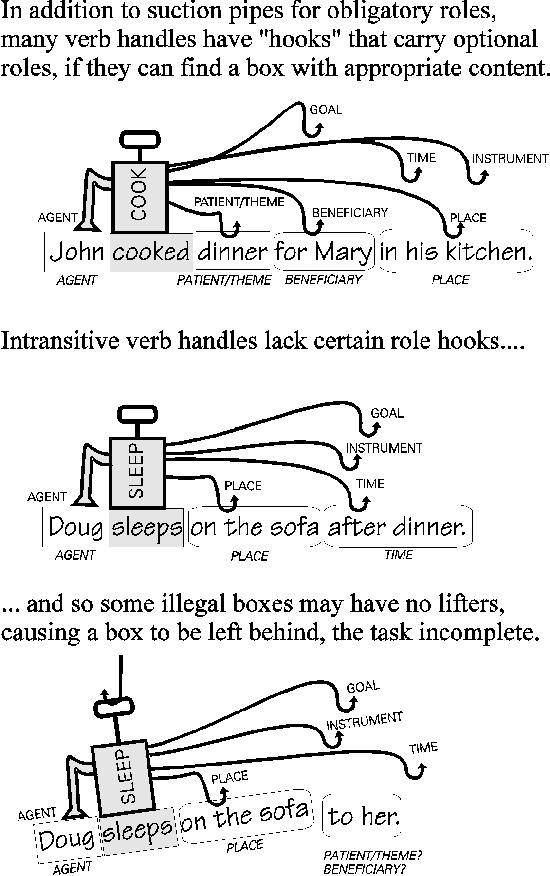

Let us say we have just heard or read a complete sentence, “The tall blond man with one black shoe gave the other one to her.” How do we make a mental model of the action? We need to box up some of the pieces, and prepositional phrases are a good place to start. Our machine knows all the prepositions and takes the nouns adjacent to them (the following noun if the sentence is English, the preceding noun if it’s in Japanese) into the same box. I’ll use boxes with rounded corners to indicate the packaging of phrases — “with one black shoe” and “to her.”

On occasion, nonlinguistic memories have to be brought to bear in order to box things up correctly, as in that ambiguous phrase “the cow with the crumpled horn that Farmer Giles likes.” Knowing that Giles has a collection of horns over the fireplace could help you guess whether “that Farmer Giles likes” should be boxed with “cow” or with “crumpled horn.”

Verbs get special boxes, because of the special role they play. Had there been an -ly word (an adverb), or an auxiliary, such as “must,” I would have boxed them up with the verb, even if they weren’t adjacent to it. Then we box up the noun phrases, incorporating any prepositional phrase boxes that modify them, so that we may have rounded boxes within rectangular boxes. If we have a nested phrase, it can function as a noun for purposes of the next boxing.

Now we’ve got everything boxed up (there have to be at least two boxes but often there are more). Next we’ve got to “lift” them as a group and metaphorically carry this amalgamation away from the work space, understood at last. Will it get off the ground? There are a few different types of handles in my vacuum lifter machine, and the one we must use depends on the verb we identified (in this case, the past tense of “give”). There is another vacuum sucker, for the noun phrase-box containing the subject (I’ve drawn it as a little pyramid). You can’t have a sentence without both a subject and a verb, and if the subject is missing, air will be sucked in the opening, no vacuum will form, and the package lifter won’t lift. (That’s why I’ve used suckers here rather than hooks — to make a target obligatory.)

But, as I noted earlier, “give” is peculiar, in that it requires two objects. (You can’t say, “I gave to her.” Or “I gave it.”) Therefore this lift handle has two additional suction lines. I’ve also allowed it some nonvacuum lines — simple strings with hooks, which can carry as many optional noun phrases and prepositional phrases as the verb allows.

Sometimes the suction tips and optional hooks need some guidance to find an appropriate target: for example, SVO might help the subject tip find the appropriate noun phrase — as might a case marker, such as “he.” Other inflections help out, like gender or number agreement between verb and subject. The suction tips and the hooks could come with little labels on them for Beneficiary, Instrument, Negation, Obligation, Purpose, Possession, and so forth, mating only with words appropriate to those categories.

Being able to lift the verb handle and carry all the packages, leaving none behind and with no unfilled suction tips, is what constitutes sentence recognition in this particular grammar machine. If a suction tip can’t find a home, no vacuum develops when you lift the handle, and your construction isn’t carried away. There’s no sense of completion.

As noted, each verb, once identified by the lingua ex machina, has a characteristic handle type: for instance, handles for intransitive verbs such as “sleep” have only the one suction tip for the subject, but they have optional hooks, in case there are any extra phrases to be hauled along. “Sleep” will support optional roles such as Time (“after dinner”) and Place (“on the sofa”) — but not Recipient. There’s usually a vacuum tip for an Agent (though sometimes there isn’t an Agent — say, in sentences like “The ice melted”), perhaps other role-related suction tips, and some hooks for other possible roles in the verb’s storytelling repertoire. And, of course, the same boxes-inside-boxes principle that allowed a prepositional phrase to serve as a noun can allow us to have sentences inside sentences, as in dependent clauses or “I think I saw....”
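One way to picture the labeled tips and hooks is as a lookup table keyed by verb, with word order and prepositions acting as the guidance clues. The Python sketch below is hypothetical throughout: the `HANDLES` and `PREPOSITION_ROLE` tables, the role names `Item` and `Recipient`, and the rule that a noun phrase before the verb is the Agent are assumptions made for illustration, and the code assumes exactly one verb box per sentence.

```python
# Hypothetical handle table: obligatory suction tips, optional hooks.
HANDLES = {
    "gave":  {"tips": {"Agent", "Item", "Recipient"},
              "hooks": {"Time", "Place"}},
    "slept": {"tips": {"Agent"},
              "hooks": {"Time", "Place"}},
}

# Hypothetical clue table: the role a leading preposition points to.
PREPOSITION_ROLE = {"to": "Recipient", "after": "Time", "on": "Place"}


def assign_roles(boxes):
    """Guide tips and hooks to boxes using word order and prepositions.

    `boxes` is a list of (kind, text) pairs, kind being "NP", "PP", or "V".
    Returns (verb, {role: text}, leftover_texts).
    """
    verb_at = next(i for i, (kind, _) in enumerate(boxes) if kind == "V")
    verb = boxes[verb_at][1]
    roles, leftover = {}, []
    for i, (kind, text) in enumerate(boxes):
        if kind == "V":
            continue
        if kind == "PP":
            role = PREPOSITION_ROLE.get(text.split()[0])
        else:
            # SVO clue: a noun phrase before the verb is taken as the Agent.
            role = "Agent" if i < verb_at else "Item"
        if role is None or role in roles:
            leftover.append(text)          # nothing to attach this box to
        else:
            roles[role] = text
    return verb, roles, leftover


def lifts(boxes):
    """True if every suction tip is filled and no box is left behind."""
    verb, roles, leftover = assign_roles(boxes)
    handle = HANDLES[verb]
    allowed = handle["tips"] | handle["hooks"]
    stray = [role for role in roles if role not in allowed]
    return not leftover and not stray and handle["tips"] <= set(roles)


gave_ok = [("NP", "the tall blond man with one black shoe"),
           ("V", "gave"), ("NP", "the other one"), ("PP", "to her")]
gave_bad = [("NP", "I"), ("V", "gave"), ("PP", "to her")]
slept_ok = [("NP", "he"), ("V", "slept"),
            ("PP", "on the sofa"), ("PP", "after dinner")]
slept_bad = [("NP", "he"), ("V", "slept"), ("PP", "to her")]
```

Here `lifts(gave_ok)` and `lifts(slept_ok)` succeed. `gave_bad` fails because the tip for the item given finds no target, and `slept_bad` fails because the “sleep” handle has nothing that will mate with a Recipient.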

|

|

That’s the short version of my package-carrying system. If it seems worthy of Rube Goldberg, remember that he’s the patron saint of evolution.

I assume that, just as in a roomful of bingo players, many attempts at a solution are made in parallel, with multiple copies of the candidate sentence superimposed on different prototypical sentence scaffolds, and that most of these arrangements fail because of leftover words and unfilled suction tips. The version whose verb handle lifts everything shouts “Bingo!” and the deciphering game is over (unless, of course, there’s a tie). Being able to lift everything is simply a test of a properly patterned sentence; note that, once lifted successfully, sequence and inflections no longer matter, because roles have been assigned.

This lingua ex machina would lift certain kinds of nonsense — such as Chomsky’s famous example, “Colorless green ideas sleep furiously” — but would, appropriately, fail to lift a nonsentence, such as “Colorless green ideas sleep them.” (The “sleep” verb handle has no hooks or suckers for leftover Objects.) Though a sensible mental model of relationships may be the goal of communication, and ungrammatical sentences cannot be deciphered except through simple word association, grammatical patterns of words can nonetheless be generated that fit sentence expectations but have no reasonable mental model associated with them.

The test of semantics is different from the test of grammar. Semantics is also the tie-breaker, deciding among multiple winners, in somewhat the same way as boxing matches without a knockout are decided on judges’ points. That’s also how we guess what Farmer Giles is likely to like, the cow or the horn.
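The separation between the grammar test and the semantic tie-breaker can be made concrete with a short Python sketch. Everything named here is an assumption for illustration: readings are plain dictionaries mapping role labels to phrases, the `SLEEP` and `LIKES` handles and the `Manner`, `Object`, and `Theme` labels are invented, and `FONDNESS` stands in for whatever nonlinguistic memory supplies the judges’ points. The list comprehension examines readings one at a time, where the text imagines them competing in parallel.

```python
def lifts(roles, handle):
    """Grammar test: all tips filled, nothing outside tips and hooks."""
    tips, hooks = handle
    return tips <= set(roles) <= tips | hooks


def bingo(readings, handle, plausibility):
    """Keep the readings that lift; break ties on plausibility.

    Returns the winning reading, or None if nothing lifts.
    """
    winners = [r for r in readings if lifts(r, handle)]
    return max(winners, key=plausibility, default=None)


# Assumed handle for "sleep": one tip, a few optional hooks, no Object.
SLEEP = ({"Agent"}, {"Time", "Place", "Manner"})
nonsense = {"Agent": "colorless green ideas", "Manner": "furiously"}
nonsentence = {"Agent": "colorless green ideas", "Object": "them"}

# Assumed handle for "likes", with two readings of the Farmer Giles phrase.
LIKES = ({"Agent", "Theme"}, set())
giles_readings = [
    {"Agent": "Farmer Giles", "Theme": "cow"},
    {"Agent": "Farmer Giles", "Theme": "crumpled horn"},
]

# Stand-in for memory: Giles keeps a collection of horns over the fireplace.
FONDNESS = {"crumpled horn": 1.0}


def giles_plausibility(reading):
    return FONDNESS.get(reading["Theme"], 0.0)


winner = bingo(giles_readings, LIKES, giles_plausibility)
```

The nonsense reading passes `lifts` even though no plausibility score would favor it, the nonsentence does not, and both Giles readings lift, so the choice between cow and horn falls to the plausibility function alone.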

|

|

While each sentence is a little story, we also build string-based conceptual structures far

larger than sentences. They too have lots of obligatory and optional roles to fill. They come

along in the wake of grammar, as the writer Kathryn Morton observes:

By borrowing the mental structures for syntax to judge other combinations of possible actions, we can extend our plan-ahead abilities and our intelligence. To some extent, this is done by talking silently to ourselves, making narratives out of what might happen next, and then applying syntax-like rules of combination to rate (a decision on points, again) a candidate scenario as dangerous nonsense, mere nonsense, possible, likely, or logical. But our intelligent guessing is not limited to language-like constructs; indeed, we may shout “Eureka!” when a set of mental relationships clicks into place, yet have trouble expressing this understanding verbally for weeks thereafter. What is it about human brains that allows us to be so good at guessing complicated relationships?

|

